Fine-tuned Embedding & Retriever Model

We leverage fine-tuned, domain-specific models to understand automotive context, so you can retrieve precise, relevant data with high accuracy.

The fine-tuned Embedding & Retriever Model is at the core of Predii's ability to perform precise, context-aware search in automotive repair data. This model is tailored to understand the unique language and relationships found in automotive service and parts data, ensuring that relevant information is retrieved with high accuracy.

This capability makes it possible to search large volumes of automotive repair data quickly and accurately. It significantly reduces the time spent filtering through irrelevant data, improving efficiency for technicians and for parts lookup. The model’s domain-specific tuning makes it effective in automotive contexts, where standard keyword search methods may struggle.

Our models are trained to understand contextual nuances specific to automotive repair, e.g., how terms such as "oxygen" and "air" are used in that context.

Our models use specialized prompt engineering and fine-tuning layers based on deep domain experience in parts & service to ensure that domain-specific terminology is understood accurately and that complex queries are handled reliably.

Vectorization

The model converts textual data (e.g., repair procedures, part names) into vectorized representations, allowing it to compare and match similar terms, even if they are phrased differently.

Predii uses specialized embedding techniques to generate semantic embeddings for automotive terminology, improving search accuracy by capturing the domain-specific meaning behind terms, not just keyword matches.
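To make the idea of turning text into vectors concrete, here is a minimal sketch. It is not Predii's fine-tuned model: it is a toy stand-in that hashes character trigrams into a fixed-size vector, so it captures surface similarity only, not domain meaning. The function name `embed` and the dimension `DIM` are assumptions made for illustration.

```python
import hashlib
import math

DIM = 64  # hypothetical vector size, chosen only for illustration


def embed(text: str, dim: int = DIM) -> list[float]:
    """Toy embedding: hash character trigrams into an L2-normalized vector.

    A real system would call a fine-tuned embedding model here instead.
    """
    vec = [0.0] * dim
    padded = f"  {text.lower()}  "  # pad so short terms still yield trigrams
    for i in range(len(padded) - 2):
        trigram = padded[i:i + 3]
        digest = hashlib.md5(trigram.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "big") % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


part_vector = embed("front brake pad set")
```

Because every vector has the same length and unit norm, any two pieces of text can be compared directly, which is what the retrieval step relies on.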

Data Generation

Predii generates synthetic, parts & service domain-specific data, e.g., manuals, repair orders, part catalog data, and more, specifically to train and fine-tune our models.

Efficient Retrieval

Vectorized retrieval enables semantic search, where the model can identify the most relevant results based on the meaning of the query, not just the exact keywords.

Similarity measures and distance metrics compare the vector representation of a query against the vectorized data, returning the most contextually relevant results.
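The comparison step can be sketched with cosine similarity, one common similarity measure; the source does not say which metric Predii uses, so treat that choice as an assumption. The document IDs and three-dimensional vectors below are hypothetical and hand-written purely for illustration.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], index: dict[str, list[float]], k: int = 2):
    """Return the k (doc_id, score) pairs most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), doc_id)
              for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(doc_id, score) for score, doc_id in scored[:k]]


# Hypothetical pre-computed document vectors.
index = {
    "o2_sensor_procedure": [0.9, 0.1, 0.0],
    "air_filter_catalog": [0.1, 0.9, 0.1],
    "brake_pad_catalog": [0.0, 0.1, 0.9],
}
query = [0.8, 0.2, 0.0]  # hypothetical query vector
results = top_k(query, index, k=2)
```

A brute-force scan like this is fine for a handful of vectors; at larger scale a vector database would typically use an approximate nearest-neighbor index instead.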

RAG Integration

Retrieval-Augmented Generation (RAG) improves the quality of the search results by combining two components:

A fine-tuned retrieval model fetches relevant data snippets from indexed automotive repair data based on complex queries.

A generative model turns the retrieved data into structured, actionable responses with contextually accurate, detailed answers, rather than simply returning the most similar results.
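The hand-off between the retriever and the generative model can be sketched as prompt assembly: retrieved snippets are placed in front of the user's question before the model is called. The prompt wording, the `build_prompt` name, and the sample snippets are all assumptions for illustration; the call to the generative model itself is deliberately left out.

```python
def build_prompt(query: str,
                 snippets: list[tuple[str, str]],
                 max_snippets: int = 3) -> str:
    """Combine retrieved (source, text) snippets and the query into one prompt."""
    context_lines = [
        f"[{i}] ({source}) {text}"
        for i, (source, text) in enumerate(snippets[:max_snippets], start=1)
    ]
    context = "\n".join(context_lines) if context_lines else "(no context retrieved)"
    return (
        "Answer the automotive question using only the context below.\n"
        "Cite snippet numbers in brackets.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\n"
        "Answer:"
    )


# Hypothetical snippets, as a retriever might return them.
retrieved = [
    ("service_manual", "Disconnect the battery before removing the oxygen sensor."),
    ("parts_catalog", "Oxygen sensor, upstream, 4-wire."),
]
prompt = build_prompt("How do I replace the upstream oxygen sensor?", retrieved)
```

Numbering the snippets lets the generated answer point back to the data it was grounded in, which makes the response easier to verify.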

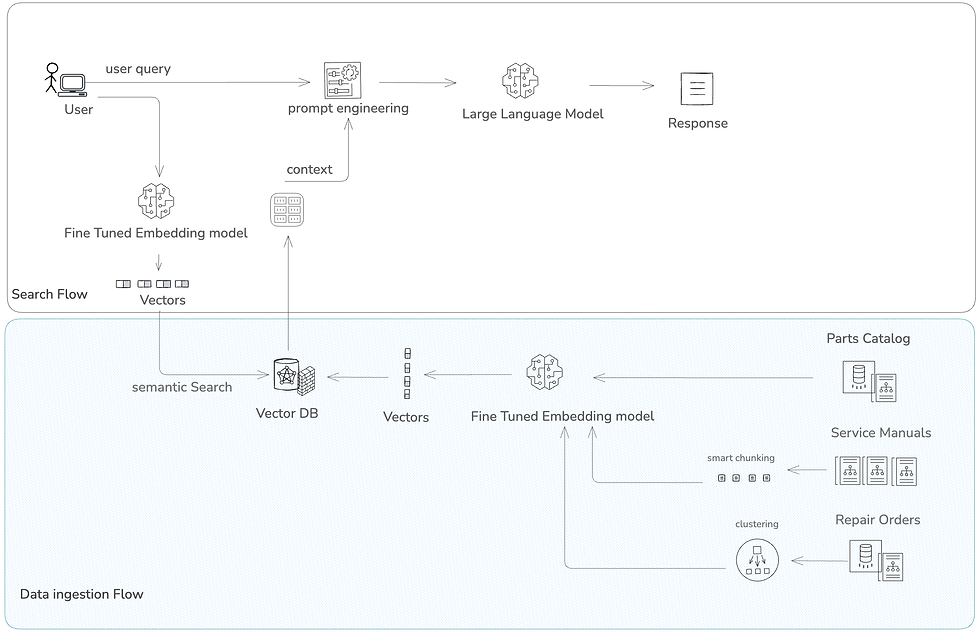

This diagram illustrates how Predii processes automotive queries using a two-part system: (1) the data ingestion flow, where structured and unstructured content such as parts catalogs, service manuals, and repair orders is embedded using fine-tuned models and stored in a vector database; and (2) the search flow, where a user query is parsed, embedded, and semantically matched against the vector database. The retrieved context is then used to build prompts for a large language model, which generates precise, context-aware responses for parts and service scenarios.
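The ingestion flow described above can be sketched as a chunk, embed, and store loop. This is an illustrative outline under stated assumptions, not Predii's pipeline: the `Record` type, the word-based `chunk` helper, the in-memory list standing in for a vector database, and the `fake_embed` placeholder are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Record:
    source: str          # e.g. "service_manual" or "parts_catalog"
    text: str            # the chunk of content that was embedded
    vector: list[float]  # its embedding


def chunk(text: str, max_words: int = 40) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words])
            for i in range(0, len(words), max_words)]


def ingest(documents: dict[str, str],
           embed: Callable[[str], list[float]],
           max_words: int = 40) -> list[Record]:
    """Chunk each document, embed every chunk, and collect the records."""
    store: list[Record] = []  # in-memory stand-in for a vector database
    for source, text in documents.items():
        for piece in chunk(text, max_words):
            store.append(Record(source, piece, embed(piece)))
    return store


def fake_embed(text: str) -> list[float]:
    """Placeholder only; a real system would call a fine-tuned model here."""
    return [float(len(text)), float(len(text.split()))]


docs = {
    "service_manual": "Disconnect the battery before removing the oxygen sensor connector.",
    "parts_catalog": "Engine air filter, panel type.",
}
store = ingest(docs, fake_embed, max_words=5)
```

Passing the embedding function in as a parameter keeps the ingestion logic independent of whichever model produces the vectors.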